The ZMDK Chronicles

Machine Learning: When Algorithms Dream of Electric Sheep

Discover the mind-bending world of machine learning where algorithms dream of electric sheep and transform our understanding of intelligence!

Understanding the Basics: How Machine Learning Transforms Data into Knowledge

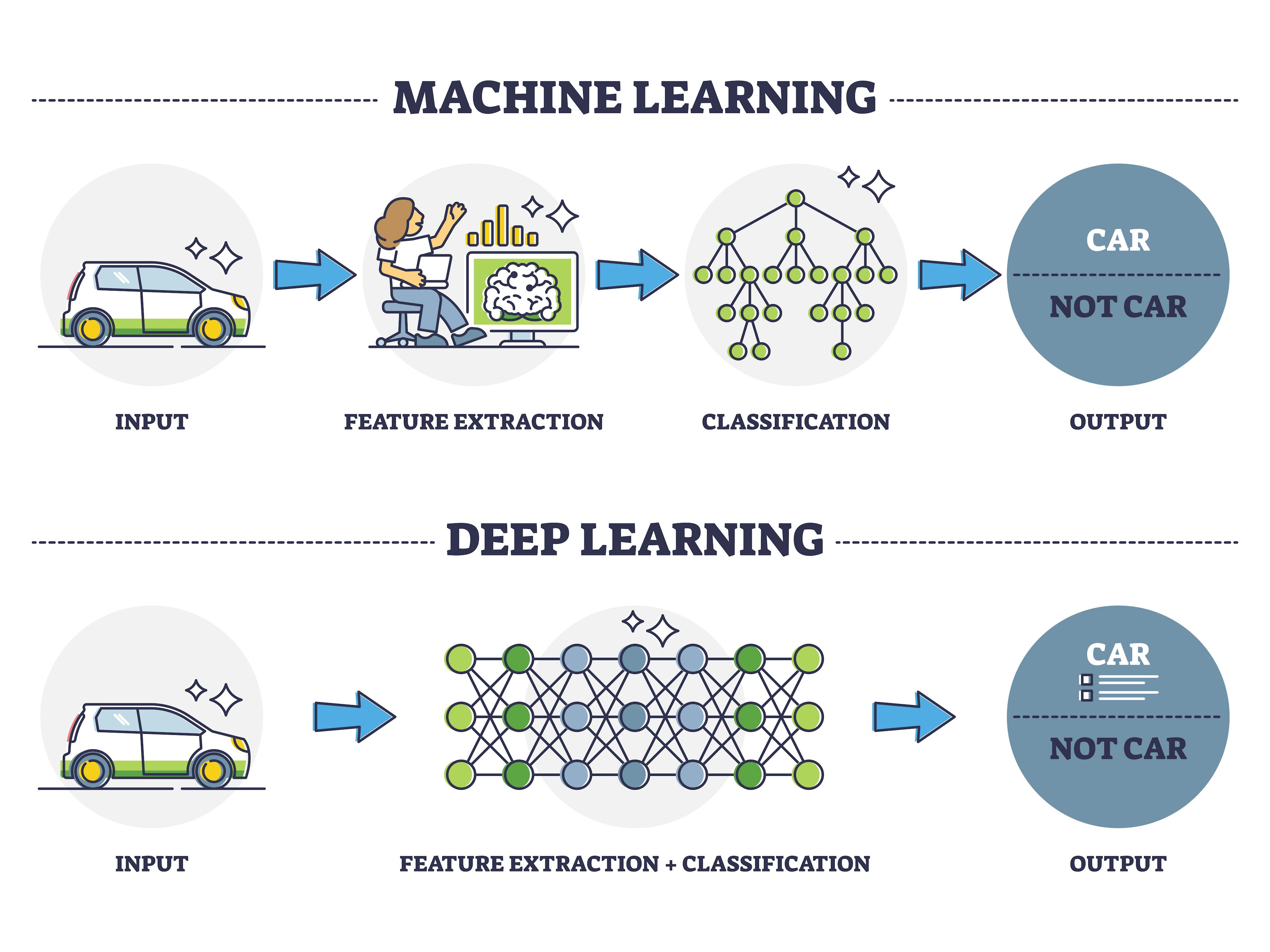

Machine learning (ML) is a subset of artificial intelligence that enables systems to learn from data and improve their performance over time without being explicitly programmed for each task. Using algorithms and statistical models, it turns raw data into actionable insights. The process typically involves three main steps: data collection, data processing (cleaning and transforming the raw records), and model training. Understanding these basics helps organizations make better use of their datasets, which in turn supports better decision-making and a competitive edge in their industries.
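
To make the three steps concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the study-hours records are invented, the function names (`collect`, `process`, `train`, `predict`) are hypothetical, and the nearest-centroid classifier is just one simple model among many that could fill the training step.

```python
from statistics import mean, stdev


def collect():
    """Step 1, data collection: made-up (hours_studied, passed_exam) records."""
    # None marks a missing measurement that must be handled before training.
    return [(1.0, 0), (2.0, 0), (None, 1), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]


def process(records):
    """Step 2, data processing: drop incomplete rows, then standardize the feature."""
    clean = [(x, y) for x, y in records if x is not None]
    xs = [x for x, _ in clean]
    mu, sigma = mean(xs), stdev(xs)
    scaled = [((x - mu) / sigma, y) for x, y in clean]
    return scaled, (mu, sigma)


def train(examples):
    """Step 3, model training: store one centroid (mean feature value) per class."""
    labels = {y for _, y in examples}
    return {label: mean(x for x, y in examples if y == label) for label in labels}


def predict(centroids, scaler, raw_x):
    """Scale a new input the same way as the training data, then pick the closest centroid."""
    mu, sigma = scaler
    z = (raw_x - mu) / sigma
    return min(centroids, key=lambda label: abs(z - centroids[label]))


examples, scaler = process(collect())
model = train(examples)
print(predict(model, scaler, 2.5), predict(model, scaler, 7.5))
```

With these invented records, the model predicts class 0 for 2.5 hours of study and class 1 for 7.5 hours. Real projects usually replace each step with something far more involved, but the collect, process, train order stays the same.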

One of the most fascinating aspects of machine learning is its ability to identify patterns and correlations in large datasets that might not be immediately apparent to human analysts. For instance, clustering groups similar data points together without needing labeled examples, while regression analysis predicts numeric outcomes from historical data. As businesses increasingly rely on data-driven strategies, machine learning becomes an important tool for turning data into knowledge and supporting more informed strategic planning.
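
The two techniques named above can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not a production implementation: the data points are invented, `kmeans_1d` and `fit_line` are hypothetical helper names, the clustering uses one-dimensional k-means with starting centroids supplied by the caller, and the regression is an ordinary least-squares line.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Cluster 1-D points with k-means (Lloyd's algorithm) from given starting centroids."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
    return centroids, clusters


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


# Clustering: two visibly separate groups of (made-up) measurements.
centroids, clusters = kmeans_1d([1.0, 1.2, 0.8, 5.0, 5.3, 4.7], centroids=[0.0, 6.0])
print("centroids:", [round(c, 2) for c in centroids])

# Regression: made-up monthly sales that grow by 2 units per month.
slope, intercept = fit_line([1, 2, 3, 4, 5], [12, 14, 16, 18, 20])
print("forecast for month 6:", slope * 6 + intercept)
```

Note the difference in what each function needs: `kmeans_1d` sees only the points and finds the groups itself, while `fit_line` needs historical input-output pairs before it can forecast a new value.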

The Ethics of AI: Do Algorithms Have a Moral Compass?

The rapid advancement of artificial intelligence (AI) has sparked a debate about the ethical implications of algorithms and their decision-making processes. As machines become increasingly capable of performing tasks traditionally reserved for humans, the question arises: do algorithms possess a moral compass? AI systems operate on data-driven models, often reflecting the biases and values of the data they are trained on. This raises concerns about fairness, accountability, and the potential for perpetuating injustice. Without proper guidelines and oversight, algorithms can make decisions that adversely affect individuals and communities, leading to ethical dilemmas that challenge our understanding of responsibility.

When discussing the ethics of AI, it's essential to consider the role of human oversight. While AI can analyze vast amounts of data quickly and efficiently, it lacks the emotional intelligence and contextual understanding that humans possess. Regulating AI technology is crucial to ensure that these systems operate within a framework that values ethics and social responsibility. Establishing ethical guidelines for AI development and implementation can help to mitigate risks associated with biased decision-making, privacy violations, and other concerns. Ultimately, while algorithms may not inherently have a moral compass, it is our responsibility to imbue them with ethical principles to guide their use in society.

How Are Machine Learning Algorithms Designed to Learn and Adapt?

Machine learning algorithms are designed with the fundamental aim of learning from data, adapting their behavior based on the input they receive. This process typically begins with the selection of a model, which is essentially a parameterized mathematical function that maps inputs to outputs. The model is then trained on a dataset, where it picks up patterns and relationships within the data. Training adjusts the model's parameters through optimization techniques such as gradient descent, so that the error in its predictions is minimized. The three main learning paradigms, supervised learning (learning from labeled examples), unsupervised learning (finding structure in unlabeled data), and reinforcement learning (learning from rewards), determine what kind of feedback drives that adjustment.
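
The parameter-adjustment loop described above can be sketched with gradient descent on a straight-line model. This is a minimal illustration under stated assumptions: the dataset is invented (it follows y = 2x + 1 exactly), the names `mse` and `train_linear` are hypothetical, and the learning rate and epoch count were picked by hand for this toy problem.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line y = w * x + b on the dataset."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1

loss_before = mse(0.0, 0.0, xs, ys)
w, b = train_linear(xs, ys)
loss_after = mse(w, b, xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss {loss_before:.3f} -> {loss_after:.6f}")
```

The model here is the function `w * x + b`, the parameters are `w` and `b`, and training is nothing more than repeatedly nudging those two numbers in the direction that lowers the error. Because the labels `ys` are supplied, this is an instance of supervised learning.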

A trained model does not keep learning on its own; it adapts to new data only when it is designed or retrained to do so. Two common techniques for this are online learning and transfer learning. In online learning, the algorithm updates its parameters incrementally as each new example arrives, which keeps it relevant in real-time applications where the data keeps changing. Transfer learning takes a model trained on one task and reuses it as the starting point for a related task, so that prior knowledge improves performance when the new task has limited data. Overall, the design of machine learning algorithms combines statistical methods with computational techniques, enabling continued improvement and adaptation as datasets evolve.
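
Online learning in particular is easy to sketch: the model sees one example at a time and adjusts itself immediately. The class below is a hypothetical illustration, not a specific library's API. It assumes a straight-line model updated by a single stochastic gradient step per example, and the data stream is invented, including the deliberate change in the underlying relationship halfway through. Transfer learning is not shown here.

```python
class OnlineLinearModel:
    """A line y = w * x + b that is updated one example at a time."""

    def __init__(self, learning_rate=0.05):
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        """Take one gradient step on the squared error of a single example."""
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error
        return error


model = OnlineLinearModel()

# Phase 1: the stream follows y = 2x + 1.
for step in range(2000):
    x = float(step % 4)
    model.update(x, 2 * x + 1)
w_phase1, b_phase1 = model.w, model.b

# Phase 2: the relationship drifts to y = -x + 4, and the same model keeps updating.
for step in range(2000):
    x = float(step % 4)
    model.update(x, -x + 4)
w_phase2, b_phase2 = model.w, model.b

print(f"after phase 1: w={w_phase1:.3f}, b={b_phase1:.3f}")
print(f"after phase 2: w={w_phase2:.3f}, b={b_phase2:.3f}")
```

No retraining from scratch happens between the two phases. The same `update` call that learned the first relationship gradually overwrites it once the incoming examples start following the second one, which is the incremental behavior the paragraph above describes.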